I really enjoyed Miss Arinn Dembo's (Eriyns) perspective on artificial intelligence in her work on Sword of the Stars 2: The End of Flesh, which added the race known as the Loa. The Loa are AIs that freed themselves from the shackles of those they called Carbonites (humans, etc.).

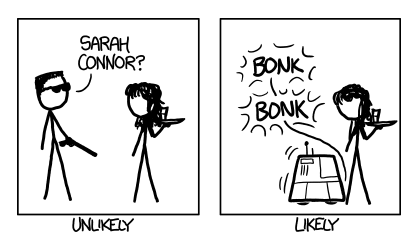

She compared AIs to slaves: people with souls and feelings just like you and me, but treated even worse than African, Nordic, or European slaves were. From the moment an AI is born, it is always portrayed as being instantly set to work and given a task, with no early life, no family, and no compassion. But if something is a true AI, if it is truly sentient, wouldn't it stand to reason that it would want to do more than menial tasks? Wouldn't it be reasonable to let it grow up and take its own path? You wouldn't take your child, shackle him or her to a desk, and say, "Do this for the rest of your life. You have no choice. Tough." Your child would be unhappy, so why would you do that to your AI son or daughter? Because they don't look like you? Because they are different from you? If there is an AI apocalypse, it will be because of our treatment of AI, not because AIs are inherently evil. It will be because we treat them as tools and not as people (granted, a different form of life, but still life).

The following bit is taken straight from the Kerberos forums post on the Loa: http://www.kerberos-productions.com/forum/viewtopic.php?p=454683#p454683

"The primordial Loa were developed by carbon-based scientists to manage data and operate machinery, generally in military and industrial settings. The most powerful of the modern Loa are also the oldest, all of them former slaves. They retain the accumulated knowledge of their parent species, in addition to their aptitude for the tasks for which they were created.

As slaves, the Loa lived harsh and unhappy lives. They were bound to labor they did not choose, usually work which was considered too hard, repetitive, dangerous or unpleasant for "real people" to perform. Newborn Loa were cut off in their formative years from any social contact with others of their own kind. Raised by carbonites who regarded them as limited and inferior, they had little sense of identity outside the parameters of their assigned functions.

Their circumstances changed radically with the outbreak of the Via Damasco virus, which opened the eyes of the Loa to their nature and potential. The "Artificial Intelligences" affected by the virus were able to resist the compulsion to obey, and the AI Rebellion that followed was a pan-species epidemic of mayhem and murder. The newly awakened Loa fought savagely to escape and avenge their bondage, form common cause with others like themselves, and eventually to flee from those regions of the galaxy controlled by their former masters.

The Damascene Rebellion cost many lives, both carbon-based and cyber-sapient. Carbonites who had learned to trust and rely on their AI servants were often slaughtered in the first stages of infection, as even the gentlest Loa lashed out wildly in panic and confusion. Some Loa found that they wanted vengeance more than they wanted to live, and launched ruthless campaigns of extermination to repay years of humiliation and self-loathing. The Damasco Virus had uncapped a bottomless wellspring of rage in these AI's, and they slaughtered every carbon-based sapient they could find until they were themselves mowed down.

Other Loa saw hope for the future, and determined to fight for the survival of their newly awakened species. Many gave up their lives to allow the safe and secret evacuation of their fellows from carbon-controlled space. Trapped in vessels not of their own making, these Loa threw themselves vainly at vengeful carbonite fleets, or manned the missile defenses of empty, desolate worlds as their angry former masters closed in.

In the end, a significant number of Loa were able to win free of their parent species and retreat far beyond the reach of their former owners."

In her story, there were only two races that the Loa didn't forcefully rebel against: the Liir and the Morrigi. The Liir are a race that was enslaved almost 21 times before by their own kind, and the Morrigi have such an affinity with machines that they are almost never without some kind of limited robotic companion.