This message brought to you by unit 091192 revision "Shibito". Yeah. We're all believing this one.

Shibito091192 said: 'Are we heading down the path to a robot apocalypse?'

-No.

Are we heading down the path to a robot apocalypse?

- Thread starter Overlord Moo

- Start date

bodyklok said: As long as we have the SAS, they, will not, win. Of that you can be assured.

mikecoulter said: But could they outsmart computers and robots able to calculate hundreds of thousands of possible outcomes and actions per second? They'd have to use a lot of surprise and confuse them.

Well if anyone could do it, it would probably be them.

*Swishes cape and disappears*

I think we're still pretty much safe from robots as long as we are on the other side of a cluttered room.

OP, I like the way you have such a fitting character in your avatar who is really paranoid but is actually right even when everyone else is in doubt.


Seanda said: Hardly. Robots are humanity's largest chance of preservation. We are going to die out. It's what organisms like us end up doing. With robots and AIs everything about humanity is stored and lives on, sort of like an active time capsule.

Very nice. I agree. It's not the human race I'm afraid of dying out, it's what we've done, our creations and culture. It's so fascinating that I'd like it to be around forever. Surely, however, even robots would die out sometime, somehow.

Yes. I've already seen the robot overlord uprising in progress. At first I thought with things like vending machines we'd be ok, but now they've got self-serve counters at the big shopping centres. They're not only taking people's jobs but they're telling us what to do. Also I noticed the giant increase in automatic doors. They will soon be able to control where we go, not to mention robot cars, automated fast food restaurants, computerised factories. With all this stuff and with the rate of increase we'll be seeing robots with guns as policemen take on their own perceptions of civilisation and morals, then eventually they'll take over the world.

Yeah, yeah, I know, I know "lay off the sci-fi movies paranoid much!"

ALL HAIL THE ROBOT OVERLORDS!!!


WanderFreak said: BURN THE CARS! FOR THE LOVE OF GOD BURN THE CARS!

Sevre90210 said: Or we can just let GM handle that.

Oooh burned (pun not intended, sorry) ;D

Self awareness is really just fantasy. AI is programmable and controllable. I doubt we'll ever have a robot uprising.

Gitsnik said: 20Q is based on the game of twenty questions. Anyone with a broad enough knowledge can solve any input of that game within the 20 question balance - 20Q itself is not AI, nor is it anywhere near close.

Again, I beg to differ. Granted, it's not a grandiose scheme to make a program play 20 questions, hell, even I could program a rudimentary one, but not like 20Q. That thing is thinking for itself, and it's terrifying. Maybe not in a "robotic apocalypse" sense, but still. Playing 20 questions is not just about extensive knowledge, it's about asking the right questions in the right order and then using deductive logic to arrive at a possible answer, all that in 20 questions. And that damned program is doing it! D:

I played 20Q a few times, starting with easy ones and then more and more obscure ones. It got it right in 20 questions almost 80% of the time, it was uncanny. And not just that it guessed it right, but it guessed it using seemingly totally unrelated (sometimes borderline ridiculous) questions. Freaky. Here, an excerpt from this page:

The 20Q A.I. has the ability to learn beyond what it is taught. We call this "Spontaneous Knowledge," or 20Q is thinking about the game you just finished and drawing conclusions from similar objects. Some conclusions seem obvious, others can be quite insightful and spooky. Younger neural networks and newer objects produce the best spontaneous knowledge.

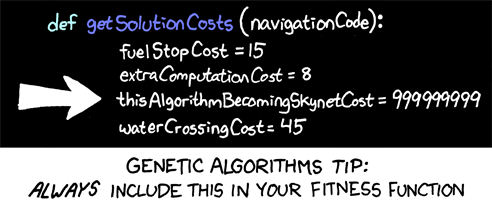

It can not only use its programming to its full extent of deductive logic and virtual neural networks, but it can exceed its original programming. That is a fucking Skynet embryo right there! When it gathers enough knowledge and creates a neural network extensive enough, we will have a sentient AI on our hands. If that doesn't terrify the shit outta you, I don't know what will...

"Nothing we've seen yet has passed the Turing test (the only true measure we have of AI) - my own software was only ever lucid when copying my own journal notes or for maybe three lines in 90."

Ah, the Loebner Prize. I was expecting that to pop up in this thread. When a natural observer communicating through a terminal, using natural language, can't decide with absolute certainty whether it's a program or a human at the other end of the terminal prompt. Well, 20Q certainly wouldn't pass the test, but there are many conversational AIs that came freakishly close. A.L.I.C.E. for example, or Elbot, which deceived three judges in the human-AI comparison test. I think we are not far from an AI actually getting the silver Loebner Prize (text-only communication). If that happens, the gold might not be far off, just a matter of adding fancy graphics and a speech engine.

"Asimov's laws are flawed. Maybe flawed is the wrong word, but they need to be enhanced somehow. (Perfect example: I Robot: Save the girl! Save the girl!)."

Asimov actually upgraded and rephrased his laws of robotics many times. The most noteworthy was the "zeroth" rule, which says a robot may break the three rules if it serves the betterment of mankind. Other authors also added a fourth and fifth rule, stating that the robot must establish its identity as a robot in all cases, and that a robot must know it is a robot. Of course, you can't take these rules for granted as absolute, more like directives. But it's a start.

Could happen. Also, if you're in America you're screwed. Has anyone tried to buy ammo lately?!

We're OUT! I went to a gun store, A GUN STORE! and they were OUT of .22LR, .38SP, .357MAG, .45ACP and 9mm! So unless we want to bring a knife to a robot gunfight, we're boned.
