EA Exec: Xbox One and PS4 Are "A Generation Ahead" Of PC

- Thread starter Andy Chalk

He probably meant the consoles are more powerful than a PC built with the same money, or just the average PC. You can, theoretically, build a high end PC that would give the consoles a run for their money (heh), but I doubt they are "a generation" ahead.

PS: Kinda off topic but "XBone", first time I see it XD.

I see a sliver of truth in what he says. I mean I'd believe him even more if he weren't a gaming executive, but just on what he said it's definitely possible. We just have to wait and see.

Gorfias said:

My God, a system like that would beat out a PlayStation 5. If they're saying "A generation ahead of PC", they must surely mean "for the same price point", because PCs are way ahead of the hardware that is in the Xbox One/PlayStation 4. The exact same hardware and/or power that is in the PlayStation 4/Xbox One will run you about $600 right now, which is more than they're likely to sell these systems for. But that won't last long, and before too long you'll be able to afford this hardware for $300. For goodness' sake, Intel's Haswell series and the HD 8000 series are right around the corner.

And by the time these systems are actually released, the Haswell and HD 8000 series will already have been out for several months. Generation ahead my foot.

Also, why would you use a Rosewill PSU with such expensive hardware? I would never trust a Rosewill to a Titan. Enermax or bust at that level. Maybe SeaSonic. Definitely nothing less.

Grabehn said: Oh look at the guy trying to get attention, I'm surprised he didn't talk about the Wii U to lick their boots too since they said they were not going to develop for that platform and now they do.

He would have, but he already had two holes full, and still needed the remaining one to speak.

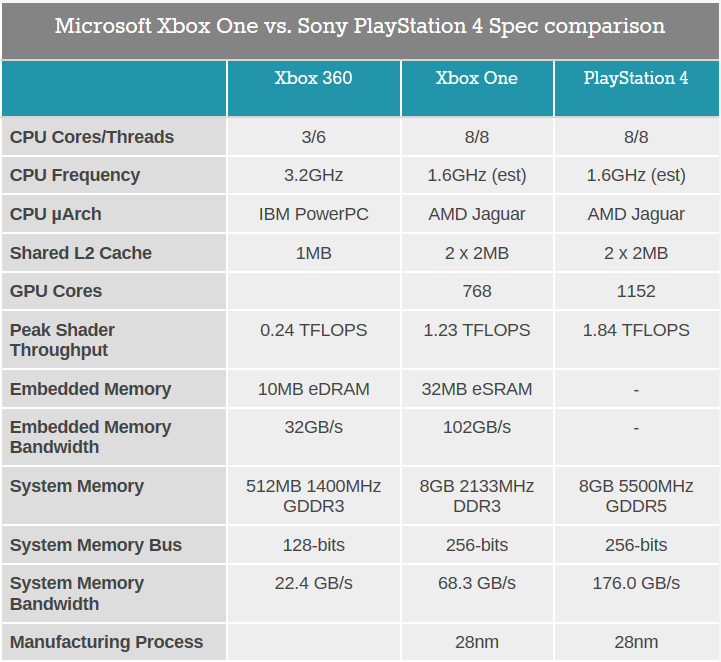

Dexter111 said: Anandtech had an interesting article on that: http://www.anandtech.com/show/6972/xbox-one-hardware-compared-to-playstation-4

Whooo specs! Thank you.

Also holy shit that is bad. Which is good I guess, a current High-Mid range gaming PC should still be able to play new games in 5 years, thanks to devs needing to cater to shit hardware.

Though at this rate, MS should probably worry about integrated graphics being a competitor. AMD isn't going to sit on that tech, and if PCs start being universal gaming machines by default again, I doubt consoles will hold up well.

The Lugz said: i'll post a few simple tech specs for those un-initiated, and you can see the utter absurdity..

DjinnFor said: 1. The fact that you think posting "tech specs" makes you look like one of the "initiated". Newsflash: the actual initiated know you're a poser. Come back to me when you've built a couple rigs and have experienced first hand how little those specs actually mean for actual performance.

2. That you either a) didn't read the article or the press release at all, or b) didn't understand it. Apparently cutting edge architecture design is equivalent to "flying cars" and "holodecks for less than £1k". I guess the idea that a company that sells electronics, and another that designs the most widely used operating system in the world, might know a thing or two about good hardware design escapes you. And let's not even talk about how an integrated, compact, stable platform mass-manufactured and sold at cost might have some room for cost-efficiency advantages over a rig built by combining standardized, generalized, premium-priced modules.

I clearly stated those specs ARE for the uninitiated, i.e. the things printed on the box and/or mass marketed, because that's what the XbO is.

It would be useless talking about how ATI cores relate to NVIDIA cores and the new technology underlying the Xbox SoC system, what a raster engine does or how a polymorph engine works, how a 192-bit bus varies from a 384-bit bus, how GDDR varies from DDR, or how the von Neumann architecture benefits from system-on-chip architecture, when I've clearly stated my post is not intended for those with a master's degree in computing.

I might as well be talking in binary. Shall we try that?

01110011 01100101 01101100 01101100 01101001 01101110 01100111 00100000 01110100 01101000 01100101 00100000 01100100 01110010 01100101 01100001 01101101
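
For anyone who doesn't read binary, here is a minimal sketch of decoding the line above, assuming each space-separated group is one 8-bit ASCII character. The helper name `decode_binary_ascii` is made up for this illustration.

```python
# The space-separated 8-bit groups from the post, copied as-is.
bits = (
    "01110011 01100101 01101100 01101100 01101001 01101110 01100111 00100000 "
    "01110100 01101000 01100101 00100000 01100100 01110010 01100101 01100001 01101101"
)


def decode_binary_ascii(text: str) -> str:
    """Turn space-separated 8-bit binary groups into an ASCII string.

    Assumption: every group is one ASCII character code written in base 2.
    """
    return "".join(chr(int(group, 2)) for group in text.split())


message = decode_binary_ascii(bits)
print(message)  # prints "selling the dream"
```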

So frankly, yes, it's a basic overview, but that's all you need to see the bald-faced lies M$ is spinning.

The fact of the matter is,

I have no misconception about what the XbO is: it's a complicated mass-produced piece of consumer electronics, and as a gaming device it will doubtless be fit for purpose. Obviously any system has advantages and disadvantages over any other; that's just life. It ought to be more efficient than running a bunch of modules in a heavily overclocked system purely because it's an SoC, but that doesn't make it the 'next generation of computing' either. They clearly said it 'outguns' the highest end PCs, which is still a plain lie. It doesn't have more of anything. It may operate more effectively, but that isn't outgunning something.

Now, their saving grace is the cloud distributed computing back-end design, which may well make up the difference, especially in multiplayer games. Only time will tell, but that isn't what they said.

Lastly,

DjinnFor said: Come back to me when you've built a couple rigs and have experienced first hand how little those specs actually mean for actual performance.

That's just the very definition of irony, my friend.

Essentially, you just did this.

http://www.escapistmagazine.com/articles/view/comics/critical-miss/10343

Lightknight said: snip

I agree. The pure specs usually don't measure up and yes, it will perform better than the set-up I mentioned but you can't really say this dude has even a grain of truth in his statement that the consoles will outperform the highest of the high-end. We're a long way away from any console outperforming something like dual GTX Titans (which is a viable set up for super-high end users).

"systems-on-a -chip (soc) architecture that unleashes magnitudes more compute and graphics power than the current generation of consoles. These architectures are a generation ahead of the highest end PC on the market"

Right. So from his statement it seems that both AMD and Intel have already developed much more powerful and cheaper products but they are keeping them off the PC market because.... they hate money and they just want to help the little guys like Sony and Microsoft get a head start?

Guess it's just EA being EA once more...

Lightknight said: snip

AlwaysPractical said: I agree. The pure specs usually don't measure up and yes, it will perform better than the set-up I mentioned but you can't really say this dude has even a grain of truth in his statement that the consoles will outperform the highest of the high-end. We're a long way away from any console outperforming something like dual GTX Titans (which is a viable set up for super-high end users).

As it stands, there are clearly PCs more powerful. My discussion on optimization is just to explain why they're not low-end PC equivalents and are more mid-high than they'd be low-mid. My current home PC appears to be a bit better as well. Maybe a lot better but I'll wait and see.

There are two sorts of benefit of the doubt we can give him:

1. He's talking about the average home PC vs the specs of the console. That is the only way it could be a generation ahead of the PC.

2. This is the most likely: what he said was that the "electronics and an integrated systems-on-a-chip (soc)" architecture was a generation ahead of computers. Perhaps he's not talking about video cards or CPUs but rather the way they're optimized. In which case it'd be correct.

But... eh... we don't know what he actually meant, do we? Maybe 3. He is announcing that he is leaving Microsoft to become a host on the Onion.

Hey, look at that. nVIDIA is releasing a newer, better graphics card.

And they'll continue doing so, well after the systems are released.

PC will always be one step ahead of the game, dude. However, pandering to the consoles has resulted in the downgrade of quality with PC games. Yeah, I like my consoles too, but I would like to see the advantages of investing in a nice PC show with game developers, you know? There are some great indie titles (I just got done playing FTL), but indie titles don't take full advantage of current hardware. They can't.

Adam Jensen said: I thought that the GPU actually ships the image straight out now; it doesn't go back to the CPU. Well, at least not anymore. There's obviously the message to say 'I've sent the frame out', but the whole frame isn't copied back into system memory; it would simply take too long and take up too much RAM (imagine shipping 60 frames of 1920x1080 data into system RAM every second, it'd nom all the bandwidth). It may have been that way in the past, of course, when GPUs were less advanced and frames smaller in size (also, you'll have to forgive me, my knowledge of PC architectures doesn't extend much further back than 2008 or so, when I really started getting interested in it all after getting my first laptop), but at least nowadays I don't think it's the case.

The Comfy Chair said: A standard PC setup handles it like this:

1. CPU explicitly copies the data to GPU memory

2. GPU completes the computation

3. CPU explicitly copies the result back to system memory

And here is how the PS4 unified architecture works:

1. CPU simply passes the pointer to the GPU

2. GPU completes the computation

3. CPU can read it instantly. There is no copying back to the CPU.
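
The two lists above can be sketched as a toy model in Python. This is only an illustration under loud assumptions: `gpu_kernel`, `discrete_path` and `unified_path` are made-up names, a `bytearray` stands in for memory, and no real GPU API is involved. It just counts how many bytes get copied in each scheme.

```python
def gpu_kernel(buf) -> None:
    """Stand-in for GPU work: double every byte in place (mod 256)."""
    for i in range(len(buf)):
        buf[i] = (buf[i] * 2) % 256


def discrete_path(system_mem: bytearray) -> int:
    """Copy in, compute, copy out. Returns the number of bytes copied."""
    bytes_copied = 0
    gpu_mem = bytearray(system_mem)   # 1. CPU copies the data to GPU memory
    bytes_copied += len(gpu_mem)
    gpu_kernel(gpu_mem)               # 2. GPU completes the computation
    system_mem[:] = gpu_mem           # 3. CPU copies the result back
    bytes_copied += len(gpu_mem)
    return bytes_copied


def unified_path(shared_mem: bytearray) -> int:
    """Pass a reference, compute in place. Returns the number of bytes copied."""
    view = memoryview(shared_mem)     # 1. CPU hands over a reference, no copy
    gpu_kernel(view)                  # 2. GPU works directly on shared memory
    return 0                          # 3. CPU reads shared_mem, nothing to copy


data_a = bytearray(range(8))
data_b = bytearray(range(8))
copied_a = discrete_path(data_a)
copied_b = unified_path(data_b)
print(list(data_a), copied_a)
print(list(data_b), copied_b)
```

Both paths end with the same result in memory; the only difference this toy shows is the copy traffic, which is the point the quoted lists are making.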

The only advantage the APU would have in that sense would be that the 'ok, the frame buffer can take another delivery of a frame to prepare' message would get there a bit faster.

Anandtech has an article which gives an overview of the graphics pipeline:

http://www.anandtech.com/show/6857/amd-stuttering-issues-driver-roadmap-fraps/2

Aside from the message back to the CPU to say 'moar information please', it's a one way street.
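
For a rough sense of scale on the "60 frames of 1920x1080" figure mentioned in this post, the arithmetic can be written out as a small Python sketch. The 4 bytes per pixel is an assumption (32-bit colour); the post does not state a pixel format.

```python
WIDTH, HEIGHT = 1920, 1080
FPS = 60
BYTES_PER_PIXEL = 4  # assumption: 32-bit colour, not stated in the post

# Size of one uncompressed frame, and the traffic from moving 60 of them a second.
frame_bytes = WIDTH * HEIGHT * BYTES_PER_PIXEL
bytes_per_second = frame_bytes * FPS

print(f"one frame: {frame_bytes:,} bytes")
print(f"at {FPS} fps: {bytes_per_second:,} bytes per second "
      f"(~{bytes_per_second / 1e9:.2f} GB/s)")
```

With a different pixel format the totals scale linearly with `BYTES_PER_PIXEL`.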

Lightknight said: 2. This is the most likely, what he said was that the "electronics and an integrated systems-on-a-chip (soc)" architecture was a generation ahead of computers. Perhaps he's not talking about video cards or cpu but rather the way they're optimized. In which case it'd be correct.

If you actually go and read the piece that he wrote, rather than the bastardized version that Chalk has foisted on The Escapist to drum up nerd rage, that is precisely what he means.

This whole affair is just cynical "journalists" selectively quoting someone to generate rage, and more importantly page hits. I'd say that they should be ashamed of themselves, but I've long since come to the conclusion that they're not capable of the emotion.

Lightknight said: 2. This is the most likely, what he said was that the "electronics and an integrated systems-on-a-chip (soc)" architecture was a generation ahead of computers. Perhaps he's not talking about video cards or cpu but rather the way they're optimized. In which case it'd be correct.

Raesvelg said: If you actually go and read the piece that he wrote, rather than the bastardized version that Chalk has foisted on The Escapist to drum up nerd rage, that is precisely what he means. This whole affair is just cynical "journalists" selectively quoting someone to generate rage, and more importantly page hits. I'd say that they should be ashamed of themselves, but I've long since come to the conclusion that they're not capable of the emotion.

If that's the case then he was being entirely honest in that regard. Thanks for confirming.

Aside from the DDR5 RAM part, which is expensive as hell and which I don't think is even manufactured yet to be sold (not in my country at least), the rest of the specs aren't even close to what a "high end PC" is.

I would argue that my 3 year old PC is below what the next gen consoles can put out in terms of power: i5 processor, 8GB RAM, GTX 560Ti or so I think.

The rest of the PC gamers who do have high end machines surpass this generation by a mile in terms of their configurations.

Hahahaha! Good one. XD

No, but in all seriousness, this claim is pretty dubious. Especially if you're going to talk about 'Raw Power'.

Having said that, several well-known developers (and the hardware manufacturers) have pointed out in the past just how high the overhead on PC is.

For instance, the 360 can manage about 10 times more render calls than a PC ever could. That doesn't translate into 10 times more performance. (It in fact means that you waste less CPU time getting the GPU to do work.)

But it's not trivial.

The overhead on PCs is huge. Be that as it may, saying the new consoles outdo high end PCs is laughable. (And even if true now, it probably won't be remotely accurate even a year or two from now...)
