The graphics are fine this current generation; I just want better, more interesting games. Not the same games with nicer graphics. I want an epic WW2 shooter, not another corridor shooter.

Rumor: 360 Successor's GPU and Release Date Detailed

- Thread starter The Wooster

The rumors about the WiiU using a Radeon card from the 5000 series (DX 10) have as much substantiation as these rumors. Of course we are discussing rumors, but since that is all we have, there is no harm in analyzing them. At the moment, the rumors we have show the WiiU launching with GPU hardware one feature set behind that of the next Xbox. This makes sense, as the WiiU will launch sooner, and it follows Nintendo's recent trend of staying on the cheap side in console cost terms.

Baresark said: Lot of funny comments on this thread... It's stupid to compare the WiiU to anything, since no one knows what is actually in it. They built a system they believe will be comparable to the WiiU in power, but nothing is known for sure. Also, this is just a rumor anyway. Everyone is speculating on something we definitely don't know and on things that "MAY" be true. No one has stated the WiiU is going to use DX10 at all, or if they did, it was not a reliable source, since Nintendo has released literally zero information about this component or its graphical capabilities. Nor would it make any sense for them to release something that didn't support DX11, since the cards that support it are now commonplace and inexpensive. And there is no reason a company would choose a card that only supports DX10, since it was complete shit.

ph0b0s123 said: -snip-

I mean no insult, and I love speculating as much as the next guy, but you should not speak so matter-of-factly about something that is completely unknown.

Edit: You can buy first-generation DX11 cards for $45, just as an example of how inexpensive these cards are.

It is true that you may not have to upgrade, but you may have to in order to play new PC games at their best. Now that consoles will have hardware feature parity with PCs for the foreseeable future (until DX12 comes out), it will be easier for ports to push PCs. Up until now we have been held back, because consoles were two generations of graphics hardware features behind and not many developers wanted to program for the newer features when porting to the PC. So most of the advances we have had on PCs in the last few years went unused. Now the features will be used, even if only to a small amount, which means it will be easy to just turn them up for the PC release rather than programming those features in when porting.

Supernova1138 said: If this is true, I won't need to upgrade my graphics card for a very long time, as my single Radeon HD 6870 vastly outstrips the 6670's capabilities. Frankly, the 6870 should be the minimum baseline for a console upgrade. It can easily run most current titles at 1080p and deliver 60 FPS. That allows much more breathing space for future games, unlike the 6670, which is already considered a fairly weak gaming card today and will be even weaker by 2013 standards.

ph0b0s123 said: -snip-

I will say this to anyone who asks why not a higher-end 6000 or 7000 GPU: that's easy, heat. Unless someone has heard something different, the console will be the same size as the current 360. There is no way you are going to cool one of the high-end 6900 GPUs in that space, not without downclocking it so much that it would be about as fast as what they are rumored to be putting in. As for the 7000s, AMD has only just launched the two high-end GPUs, and there is no way these would go in, due to heat. Microsoft has no idea which of the lower-end cards launching later this year will fit its heat envelope, so if it wants to finalize the design now, it has to pick a GPU from the 6000 series.

Frostbite3789 said: The 6670 is the low-end gaming card for the AMD 6000 series.

vrbtny said: -snip-

Couple that with the 7000 series already being released.

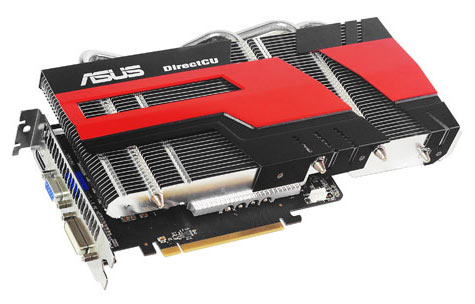

You may ask why not go a bit higher in the 6000s, say to the 6750 (the next one up). Compare how much extra passive cooling you need for just that step. The 6770 is the highest you can go with passive cooling, which is console form factor territory. Compare the sizes of the heat sinks required and imagine how easily they would fit in a 360-sized case.

Radeon 6670

Radeon 6750

Radeon 6770

What I really want to hear about is the CPU rather than the GPU; as everybody always says, it's about gameplay, not graphics. I want to see if they are going to give this console a decent CPU. It is the CPU that determines how clever some of your game mechanics can be, like mechanics that rely on advanced AI. It is the CPU processing deficit that means you only get 24 players in console Battlefield multiplayer vs 64 on PC. If they have a decent CPU, they have more of a chance of bringing in some revolutionary game mechanic changes.
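To illustrate why player count leans on the CPU, here is a minimal sketch that assumes, hypothetically, a naive game loop checking every pair of players against each other once per tick (for hit detection, visibility, and so on). Real engines use spatial partitioning to avoid this, so treat it as an upper-bound illustration rather than how Battlefield actually works. The larger lobby of 64 is an assumed figure for comparison.

```python
def pairwise_checks(players: int) -> int:
    """Unique player pairs a naive all-pairs check visits each tick: n * (n - 1) / 2."""
    return players * (players - 1) // 2


# Hypothetical comparison: a 24-player console lobby vs a larger 64-player lobby.
for count in (24, 64):
    print(f"{count} players -> {pairwise_checks(count)} pair checks per tick")
```

Going from 24 to 64 players is about 2.7 times as many players but roughly 7.3 times as many pairs (276 vs 2016), which is why extra players can cost far more CPU time than a linear guess suggests.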

I don't get it. I read this on another forum:

The ancient old 7800 GT GPU in the PS3 runs Crysis 2 perfectly. Put that GPU in a PC and it will never even run it at all. The point is even slightly outdated hardware can be pushed FAR beyond anything it can ever do in a PC, 4-5 times faster at least.

My question is how can they do that? Is it hardware or software and why don't they use it on PCs? And how do they do it without melting the damn thing?

Can we please have an Xbox thread without PC gamers crying out about how they're superior, like they have something to prove? I feel bad for you that you feel the need to point this stuff out.

They program 'direct to metal', rather than going through middleman APIs like DirectX and OpenGL. They program to the specific hardware of the one GPU they have to think about, using lower-level programming languages. This can result in significant efficiencies.

EHKOS said: I don't get it. I read this on another forum: -snip-

Discussed here: http://www.techspot.com/news/42902-amd-directx-getting-in-the-way-of-pc-gaming-graphics.html

And here: http://www.bit-tech.net/hardware/graphics/2011/03/16/farewell-to-directx/2
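One commonly cited part of the 'direct to metal' advantage is the fixed CPU cost every draw call pays in the API and driver layer. The toy model below is only a sketch under stated assumptions: the two per-call costs and the 50% share of the frame spent on submission are made-up placeholders, not measured figures for any console or PC API. It just shows how a thinner layer lets more separate objects be drawn in the same frame budget.

```python
def max_draw_calls(target_fps: float, cpu_fraction: float, overhead_us: float) -> int:
    """Draw calls that fit in the share of one frame's CPU time spent submitting them.

    Toy model (assumption): each call costs a fixed overhead_us microseconds
    of CPU time, and nothing else limits submission.
    """
    frame_budget_us = 1_000_000 / target_fps          # microseconds per frame
    submission_budget_us = frame_budget_us * cpu_fraction
    return int(submission_budget_us // overhead_us)


# Hypothetical per-call costs, chosen only to show the shape of the trade-off.
for label, cost_us in (("thin console layer", 2.0), ("thicker PC API path", 10.0)):
    print(label, max_draw_calls(30, 0.5, cost_us))
```

Under these placeholder numbers, the thinner path fits five times as many draw calls per 30 FPS frame; the real ratio depends entirely on the actual per-call costs.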

The thing that I find constantly intriguing and just generally odd about how PCs and consoles stack up against each other is that while the PC versions always look prettier on top-end PCs, the Xbox version always gets way WAY better performance than the equivalent PC hardware would get in that game.

Case in point: Skyrim.

Skyrim runs alright on my PC (with a 6770) at medium to low settings. On the 360, which has far less power than my PC, it runs just as well, if not better. It doesn't look as pretty as on a top-of-the-line PC, but in terms of smoothness and playability, the 360 version, with its crappy old GFX card so laughably old that I'd use it as a coaster, does alright.

The point I'm making is that no matter what GPU they put in the new machine, it won't matter in real terms. Console developers know how to use the Xbox's GPU to get good results. Since they can dictate resolution and settings, they can optimize a whole lot better.

I don't mean they write more efficient code. I mean that whenever they do an effect, they can playtest it, and if it judders they can redo it a different way, or generally futz around and 'cheat' the effect some other way, knowing that every Xbox will get the same results. So they put out a game that will definitely work pretty well on the platform. On the PC, you pretty much have to guess at the right settings and tweak them as you play to get the game working properly, and unless you have an awesome PC or the game is based on a seriously old engine, the chances of any game actually looking like the screenshots on the box are slim to none. There are plenty of games that judder and stutter in busy scenes on my PC, even on minimum settings, when they run fine on ancient Xbox hardware.

I really wish that every Xbox game that also lands on the PC had an 'Xbox mode' that sets it to the same settings as on the Xbox. That would radically expand the ability of cheap/old machines to run awesome new games at an acceptable standard.

I'd better start saving now. This is also good to know, because now I'll know when to stop buying Xbox Live. But if they are waiting for sales to slow, it could take a bit longer (which I don't mind at all).

I'm not much of a graphics person, but a boost (which is inevitable) would be good.

You are completely correct. Just to correct you, though, it's the 4000 series card that was rumored; the 5000 series was actually the first generation of DX11 cards. I do look forward to seeing what comes of all of this. Part of me always wants to root for the underdog (which Nintendo always is these days), but it will be nice to see gaming get another healthy jump. I am chiefly a PC gamer, but I have always appreciated what was pulled out of these games on significantly lower-spec equipment. It's all that streamlining.

ph0b0s123 said: The rumors about the WiiU using a Radeon card from the 5000 series (DX 10) have as much substantiation as these rumors. -snip-

Don't forget the streamlined OS and how much better games tend to be programmed when targeting a single hardware configuration. They will be able to pull graphics out of it that match the PC as it stands now and into the future with this generation of cards. If you were to take any game that can run on the PC, turn the graphics up so high it's only running at 20 FPS (which the card can do), then figure in all that program streamlining and specialized hardware, you get top graphics at 30 FPS, which is all consoles need since they're played on a television. The only thing that stands kinda spotty now is tessellation. It's a big hit on a lot of systems, and if Rocksteady is any indication (they made Arkham City), it seems pretty tough to program at this point. But we are also talking a year down the road, and probably two years until release, at least.

ph0b0s123 said: It is true that you may not have to upgrade, but now consoles will have hardware feature parity with PCs for the foreseeable future, until DX12 comes out. -snip-
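The 20 FPS to 30 FPS claim above is easier to judge in frame-time terms. This minimal sketch only does the arithmetic; it makes no assumption about where the savings would come from.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds available to render one frame at a given frame rate."""
    return 1000.0 / fps


def required_speedup(current_fps: float, target_fps: float) -> float:
    """How much faster each frame must render to go from current_fps to target_fps."""
    return frame_time_ms(current_fps) / frame_time_ms(target_fps)


current, target = 20, 30
print(f"{current} FPS = {frame_time_ms(current):.1f} ms/frame")      # 50.0 ms
print(f"{target} FPS = {frame_time_ms(target):.1f} ms/frame")        # 33.3 ms
print(f"needed speedup: {required_speedup(current, target):.2f}x")  # 1.50x
```

In other words, the console-side optimizations would have to cut about a third of the per-frame cost (roughly 16.7 ms out of 50 ms) to turn a 20 FPS PC result into a 30 FPS console result.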

I think you may be onto something. It's true that they manage to pull a lot more performance out of these old consoles. Don't forget, though: these companies get to program for constant hardware, whereas for the PC they must program for a ridiculous number of configurations. It's pretty amazing they can make games look as good as they do on consoles. It shows what the hardware can do when properly pushed.

LostAlone said: The thing that I find constantly intriguing and just generally odd about how PCs and consoles stack up against each other is that while the PC versions always look prettier on top-end PCs, the Xbox version always gets way WAY better performance than the equivalent PC hardware would get in that game.

I think it makes sense for companies to make monitors with two refresh rate settings, 30 and 60 Hz. If you can't run a game at 60 FPS but get 30-40 FPS, you simply put the refresh rate down to 30. Here is the trick with PC gaming versus console gaming: most console games target 30 FPS, while PC gamers usually aim for 60 FPS. When the frame rate drops below 60 on a 60 Hz PC monitor, frames stay on screen for uneven lengths of time, so it appears choppy. But if you could put it down to 30 FPS, it would play smoothly. This would be your 360 mode. It would be a simple change of monitor refresh rate.
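The choppiness described above can be shown with a simplified vsync model. This sketch assumes the game finishes frames at a perfectly steady rate and each finished frame appears at the next display refresh, ignoring buffering stalls (roughly what triple-buffered vsync does). It reports how many refreshes each frame stays on screen.

```python
def refreshes_per_frame(render_fps: int, refresh_hz: int, frames: int) -> list[int]:
    """How many display refreshes each frame stays on screen (simplified vsync model).

    Assumes frame k finishes at exactly k / render_fps seconds and is shown at
    the first refresh at or after that moment; buffering stalls are ignored.
    """
    # Refresh index at which frame k appears: ceil(k * refresh_hz / render_fps),
    # computed with integer arithmetic to avoid floating-point rounding.
    shown_at = [-(-k * refresh_hz // render_fps) for k in range(frames)]
    # Time on screen = gap between one frame's refresh and the next frame's refresh.
    return [later - earlier for earlier, later in zip(shown_at, shown_at[1:])]


print(refreshes_per_frame(40, 60, 7))  # [2, 1, 2, 1, 2, 1]: 33.3 ms, 16.7 ms, ... (judder)
print(refreshes_per_frame(30, 60, 7))  # [2, 2, 2, 2, 2, 2]: every frame held 33.3 ms
print(refreshes_per_frame(30, 30, 7))  # [1, 1, 1, 1, 1, 1]: every frame held 33.3 ms
```

Under this model, a 40 FPS game on a 60 Hz display alternates between long and short frames, while 30 FPS is held evenly whether the display runs at 60 Hz or 30 Hz. So capping the frame rate at 30 on an ordinary 60 Hz monitor should give much the same even pacing as the proposed 30 Hz mode.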

ph0b0s123 said: They program 'direct to metal', rather than going through middleman APIs like DirectX and OpenGL. They program to the specific hardware of the one GPU they have to think about, using lower-level programming languages. This can result in significant efficiencies. -snip-

Thank you kind sir, you are a gentleman and a scholar.

It's not overclocked, nor overheating. I ran software to check whether it overheats, and it stayed at a steady, safe temperature; the fan is always free of clutter and dust, and the driver is updated to the newest version. Point is, at 123.something it caused fewer issues than at 285.something. Figures.

vrbtny said: That happens to my GTX 260 when I've overclocked it and it overheats. Update your driver and make sure you're not overclocking and that your laptop is well ventilated.

Hope it helps.

In the end, Nvidia is just... a pain.

But not as bad as Intel's integrated graphics numbering system.

vrbtny said: To quote Jeremy Clarkson "POWER!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!"

Seriously though, it's good to see at least a half-decent GPU lined up for the next 360. Radeon cards aren't my strong point; however, they have such a screwed-up numbering system.

Even more screwed up than Nvidia's, and that's saying something.

Facepalm... typo.

Baresark said: Just to correct you, though, it's the 4000 series card that was rumored.

I take it you are talking about how, even if a 4000 card is put in the Wii U, they will still be able to optimise the code to hell? The problem for the Wii U is that it will never be able to use DirectX 11 features like the rumored new Xbox, because its GPU won't have the hardware to support them, and it stands no chance of effectively implementing those features in software.

Supernova1138 said: Don't forget the streamlined OS and how much better games tend to be programmed when targeting a single hardware configuration. -snip- The only thing that stands kinda spotty now is tessellation. It's a big hit on a lot of systems, and if Rocksteady is any indication (they made Arkham City), it seems pretty tough to program at this point. -snip-

At minimum, it will obviously miss out on tessellation and Shader Model 5. There are other things too, like certain DOF and lighting effects, that are DX11-hardware only.

I don't know about tessellation being a big hit. Batman was bad because they screwed up the DX11 implementation; that had nothing to do with tessellation, as the game was slow even with tessellation turned off while still using the DX11 code path. It has mostly been patched now. In other games, I have never found that turning tessellation off saves much. This could be because I have Nvidia cards, and Nvidia bet big on tessellation hardware in its cards, whereas AMD did not. So AMD cards tend to perform worse when lots of tessellation is needed.
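Why heavy tessellation can hurt some cards more than others is easier to see from how geometry grows with the tessellation factor. This sketch uses an idealized uniform subdivision (each edge split into `factor` segments, giving factor² sub-triangles per triangle), not the exact pattern produced by Direct3D 11's fixed-function tessellator, and the 1,000-triangle base mesh is a hypothetical example.

```python
def triangles_after_subdivision(base_triangles: int, factor: int) -> int:
    """Triangle count when every triangle is split uniformly into factor**2 smaller ones.

    Idealized model: dividing each edge into `factor` segments yields factor**2
    sub-triangles. Real hardware tessellation patterns differ in detail.
    """
    return base_triangles * factor ** 2


base = 1000  # hypothetical mesh size
for factor in (1, 2, 4, 8, 16):
    print(f"factor {factor:>2}: {triangles_after_subdivision(base, factor):>7} triangles")
```

Because the count grows with the square of the factor, a card with weaker tessellation throughput falls behind quickly once a game asks for high factors, while low factors cost comparatively little, which fits the "turning it off doesn't save much" experience in lightly tessellated games.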

Shouldn't console gamers be demanding more? Any PC gamer who knows hardware is going to laugh at this. Using a low-to-mid-range GPU for a next-generation console that is likely to see another 10-year life cycle? I know heat is probably a factor, but this is not impressive at all. To be honest, console gamers should be disappointed.

I-Protest-I said: Can we please have an Xbox thread without PC gamers crying out about how they're superior, like they have something to prove? I feel bad for you that you feel the need to point this stuff out.

Just remember everyone, none of this power matters if you put it in an overheating tin can.

The 360, anyone? Sound familiar? Red ring? Anyone hear of that one? Hehhh? I FEEL OLD

I would never say that, with streamlined systems, a non-DX11 card could have DX11 features. I use Nvidia cards as well; the only cards I have ever had fail on me were ATI cards (two separate times). The only time I stop using an Nvidia card is when I upgrade. They will be able to optimize the code to hell, but not insert functionality that isn't there. I was more saying that what can be pushed out of old hardware is much better realized on a console than on a PC, because of the single configuration. There are also plenty of games on that hardware that are complete shit, though. A company that is willing to put in the time can get a great-looking game.

ph0b0s123 said: Facepalm... typo.

Baresark said: Just to correct you, though, it's the 4000 series card that was rumored.

I take it you are talking about how, even if a 4000 card is put in the Wii U, they will still be able to optimise the code to hell? -snip-

I had a brain fart; Batman's issue was the PhysX. That was completely messed up. If you shut that off, the game ran great. But if you turned tessellation on, you barely saw any difference whatsoever. All the games that use tessellation have had negligible graphical improvements, though. Not that DX11 wasn't a huge leap over DX9 and DX10. But I digress.

PC gamers are a different crowd. We can upgrade individual components and do it piece by piece. Since the 360 came out, I have built myself no fewer than 3 rigs and invested a lot more money in them than any 360 owner. My point is that if a system costs $1000, no one will buy it. They could easily make a system for that much that would compete with today's cutting edge, but by the time the price became affordable for everyone, it would be completely obsolete. So they try to strike the best balance they can between cost and capabilities. They learned last generation that a lot of people are not going to pay $600 for a new console. They also see that as a console becomes cheaper, more people buy it. The more people buy it, the more likely you are to get better third-party support. The more games a system offers, the more likely people are to buy it, and it repeats from there. People can expect more, but they wouldn't be running to the shelves to pick up the next generation of consoles if it were out of their price range.

koroem said: Shouldn't console gamers be demanding more? Any PC gamer who knows hardware is going to laugh at this. -snip-

Haha, I read that in his voice.

vrbtny said: To quote Jeremy Clarkson "POWER!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!"

No one is claiming their own superiority. What people are pointing out is that this is a low-to-mid-range piece of technology that is inferior to other technology already on the market. PC vs. console is the only debate where you can get called an elitist for saying one piece of hardware is more powerful or technologically advanced than another.

I-Protest-I said: Can we please have an Xbox thread without PC gamers crying out about how they're superior, like they have something to prove? I feel bad for you that you feel the need to point this stuff out.