Star Wars Jedi: Survivor launched yesterday on PC and, at this point, it's a technical failure on just about every level. This is somewhat hard to believe, as all of the issues Fallen Order had return for the sequel, despite how staggeringly obvious they were. Shader compilation stutter and traversal stutter are back, while the in-game options offer no way of addressing the game's key issues. In terms of polish, performance and accessibility, this is actually far worse than Fallen Order, perhaps taking the cake as the worst triple-A PC port of 2023, despite some remarkably strong competition.

The game's initial handshake with the player fails to improve on its predecessor. The options are barebones UE4 defaults, right down to their naming. There's zero context given to the user as to the performance and visual impact of each setting, leaving the player with no hints as to how to improve the experience on their specific hardware. There's also a litany of issues that get in the way of figuring out whether options improve performance at all: for example, toggling ray tracing off and then back on again can make the game run worse than it initially did with RT on. The only fix is a full game restart, after which performance returns to its earlier level.

There are issues with other options as well. For example, you only have access to TAA and FSR2 - and FSR2 is just not a good option for acceptable image quality. Essentially, whenever an object or the camera moves, FSR2 produces a blurred pixelisation effect at all quality levels. This applies to the entire image, giving the game a motion-blurred, ghost-like look even with motion blur turned off. Unreal Engine 4 has excellent plug-in support for DLSS and XeSS, yet the developers have chosen to ignore both, even though each offers improved image quality for Nvidia and Intel GPU owners respectively - and that's unacceptable.

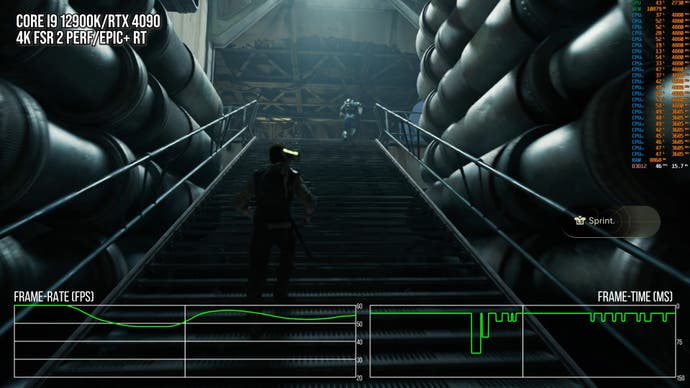

All of this is borderline insignificant, however, compared to the PC port's other issues. Shader compilation stutter is back, despite the game kicking off with a precompilation pass. The infamous traversal stutter in Fallen Order that everyone noticed and complained about? It's back again in Survivor. We've raised enough awareness around #StutterStruggle at this point that it's almost inconceivable that a major triple-A title should still release with this problem, but there it is. Traversal stutter continues to mar the Star Wars experience: everywhere you go in Star Wars Jedi: Survivor, frame-times spike for a number of frames or even over a few seconds as you cross invisible boundaries in the game world.

This happens everywhere, no matter what area of the game you are in and no matter what CPU and GPU combination you have. Even areas that look innocuous, and seem like they should be easy to load and render, induce traversal stutter. The frame-time spikes can be small, but they can also be large, and they can compound with any shader compilation stutter that happens to occur at the same time. No matter how many times you play the game or run across an area, you will always see the same stutter - and nothing can prevent it.

Shader compilation stutter returns (left) and the traversal stutter issue from Fallen Order also rears its ugly head in Survivor. 100ms stutters on a powerful processor like a Core i9 12900K, backed by 6400MT/s DDR5, are excessively poor. The results will be poorer still on mainstream CPUs.

That is not an exaggeration. Even at the lowest possible settings, you will still see traversal and shader compilation stutter on the most powerful CPUs and GPUs on the market. AMD's latest 3D V-Cache processors deliver the 'best' experience, but even these are subject to noticeable stutter.
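To put the 100ms figure in context: at a 60fps target each frame has a budget of 1000/60, roughly 16.7ms, so a single 100ms frame holds the display for the equivalent of six frames. The Python sketch below shows one simple way such spikes could be flagged in a captured frame-time log. The function names, the 2x-median threshold and the sample capture are illustrative assumptions, not the tools or data used for this analysis.

```python
from statistics import median


def frame_budget_ms(target_fps: float) -> float:
    """Time available to render one frame at the target frame-rate."""
    return 1000.0 / target_fps


def frames_missed(spike_ms: float, target_fps: float = 60.0) -> float:
    """How many target-rate frame intervals a single long frame spans."""
    # Equivalent to spike_ms / (1000 / target_fps), rearranged to keep the maths exact
    return spike_ms * target_fps / 1000.0


def find_stutters(frame_times_ms: list[float], threshold: float = 2.0) -> list[tuple[int, float]]:
    """Flag frames that take longer than `threshold` x the median frame time.

    The 2x-median rule is an assumed heuristic, chosen for illustration only.
    """
    baseline = median(frame_times_ms)
    return [(i, ft) for i, ft in enumerate(frame_times_ms) if ft > threshold * baseline]


if __name__ == "__main__":
    # Hypothetical capture: steady ~60fps with one 100ms traversal-style hitch
    capture = [16.7] * 10 + [100.0] + [16.7] * 5
    print(round(frame_budget_ms(60), 2))   # 16.67
    print(frames_missed(100.0))            # 6.0
    print(find_stutters(capture))          # [(10, 100.0)]
```

Measuring against the median rather than the mean keeps a handful of large spikes from inflating the baseline they are being compared to.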

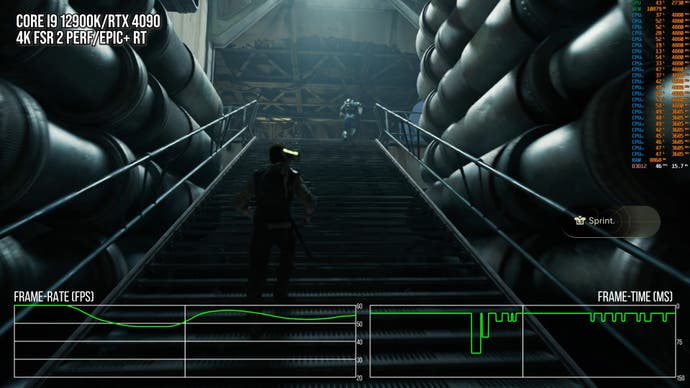

So far, so Fallen Order, but Survivor is also blighted by astonishingly low CPU core utilisation. A Core i9 12900K paired with fast 6400MT/s DDR5 and an RTX 4090 will not maintain 60 frames per second. In itself, this is not necessarily cause for alarm: hardware never guarantees that a game will run at a given setting and a given frame-rate. But here, the performance at the absolute lowest settings is completely indefensible. Barely any of the processor is used, with performance held back by around two threads that are always pegged at 70 percent utilisation or higher.

In short, Star Wars Jedi: Survivor essentially ignores the fact that CPUs have entered the many-core era. At higher settings it is even more disastrous: with ray tracing active, more of the smaller cores are tasked with maintaining RT's BVH (bounding volume hierarchy) structures, but ultimately performance drops further still, to the point where I've observed CPU-limited scenes on a 12900K that barely exceed 30fps. On a mid-range CPU like the Ryzen 5 3600, it is even more catastrophic.
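The low overall utilisation described above follows from simple averaging: when a couple of threads do most of the work, the total across a many-core CPU stays low even though those threads are the bottleneck. The sketch below uses hypothetical per-thread figures (24 threads, as on a Core i9 12900K, with two at 85 percent and the rest at 10 percent). These numbers, and the function name, are illustrative assumptions rather than measurements from the game.

```python
def summarise_cpu_load(per_thread_util: list[float], pegged_at: float = 70.0) -> dict:
    """Summarise per-thread utilisation (percent) from a monitoring snapshot.

    `pegged_at` mirrors the 70 percent figure in the text; treating it as a
    hard cut-off is an assumption for illustration.
    """
    average = sum(per_thread_util) / len(per_thread_util)
    pegged = [u for u in per_thread_util if u >= pegged_at]
    return {
        "threads": len(per_thread_util),
        "average_util": average,
        "pegged_threads": len(pegged),
    }


if __name__ == "__main__":
    # Hypothetical snapshot: 24 hardware threads, two busy, the rest mostly idle
    snapshot = [85.0, 85.0] + [10.0] * 22
    print(summarise_cpu_load(snapshot))
    # {'threads': 24, 'average_util': 16.25, 'pegged_threads': 2}
```

In a case like this, an overall reading of around 16 percent suggests plenty of headroom, while the two pegged threads are what actually cap the frame-rate, which is why a per-thread view says more than a single CPU percentage.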

In the image on the left, the game running on an RTX 4090 at lowest settings barely touches GPU resources and still can't maintain 60fps. On the right, fully maxed with RT at 4K with FSR2, this scene sees only 36% GPU utilisation, meaning that the CPU bottleneck is bringing performance down to 36fps.

Settings recommendations are therefore effectively impossible, although you can disable ray tracing to claw back some performance. Basically, Star Wars Jedi: Survivor is not using the hardware available to it in any meaningful way. I still can't get my head around the fact that one of the best games of 2019 launched with profound issues on PC that were never fixed - and now we see them again in the 2023 sequel, on top of a host of other issues.

Yes, there are patches on the way, and EA has offered up a hollow mea culpa that actually seems to shift the blame onto users' hardware, but none of this changes the fact that the PC version of a very handsome game has launched in a totally unacceptable state.

We were towards the back of the queue to receive Jedi: Survivor code. The PC version arrived on Thursday, with the console versions on Friday, so further coverage is still in development - but what we should stress is that while the PC version is terrible, this is definitely a game with strong current-gen credentials, and from a visual perspective there's much to commend it (we have a full tech review in the works that covers this and more). It's early days, but the console versions do seem to have far fewer technical issues, even though performance still requires a lot of work. We'll be reporting back on that front as soon as we can.

gameranx.com